@J N S S Kasyap

The widgets are defined in the first cell of the notebook. Not all of them need an external value, but some do. The cell looks like this:

dbutils.widgets.text("EnvName", "local")
dbutils.widgets.text("WheelFileName", "platform_tools-0.1.0-py3-none-any.whl")
dbutils.widgets.text("BasePath", "/Volumes/bdp_ont/bronze_landing/bronze_landing/")
dbutils.widgets.text("EntityName", "instrumenttransaction")
dbutils.widgets.text("MasterRunId", "000000-0000-0000-0000")
dbutils.widgets.text("RunId", "1")
dbutils.widgets.text("SourceName", "prime")
dbutils.widgets.dropdown("Debug", "False", ["True", "False"])
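Widget values, including the `Debug` dropdown, come back from `dbutils.widgets.get` as strings. If `debug` is later used in a condition such as `if debug:`, it is truthy for both `"True"` and `"False"`. A minimal sketch of explicit parsing; `parse_bool` is a hypothetical helper, not part of `dbutils`:

```python
def parse_bool(value: str) -> bool:
    """Convert a widget string such as "True"/"False" to a real bool.

    Hypothetical helper: dbutils.widgets.get always returns a string,
    and bool("False") is True, so the text is compared explicitly.
    """
    normalized = value.strip().lower()
    if normalized in ("true", "1", "yes"):
        return True
    if normalized in ("false", "0", "no", ""):
        return False
    raise ValueError(f"Unrecognised boolean widget value: {value!r}")


# In the notebook this would be: debug = parse_bool(dbutils.widgets.get("Debug"))
print(parse_bool("False"))  # False
print(bool("False"))        # True, which is the pitfall
```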

In a later cell they are retrieved:

wheel_file_name = dbutils.widgets.get("WheelFileName")
source_name = dbutils.widgets.get("SourceName")
entity_name = dbutils.widgets.get("EntityName")
base_path = dbutils.widgets.get("BasePath")
master_run_id = dbutils.widgets.get("MasterRunId")
debug = dbutils.widgets.get("Debug")
env_name = dbutils.widgets.get("EnvName")
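To see from inside the notebook whether the activity's values actually arrive, it can help to print which widgets still hold their defaults. A minimal sketch; `report_widgets` is a hypothetical helper that takes the getter as an argument so it also runs outside Databricks (in the notebook you would pass `dbutils.widgets.get`). A passed value that happens to equal the default is reported as DEFAULT, because the two cases cannot be told apart this way.

```python
from typing import Callable, Dict, List

# Defaults copied from the widget definitions in the first cell.
WIDGET_DEFAULTS: Dict[str, str] = {
    "EnvName": "local",
    "WheelFileName": "platform_tools-0.1.0-py3-none-any.whl",
    "BasePath": "/Volumes/bdp_ont/bronze_landing/bronze_landing/",
    "EntityName": "instrumenttransaction",
    "MasterRunId": "000000-0000-0000-0000",
    "RunId": "1",
    "SourceName": "prime",
    "Debug": "False",
}


def report_widgets(get: Callable[[str], str], defaults: Dict[str, str]) -> List[str]:
    """Print each widget's value and return the names still at their default."""
    at_default = []
    for name, default in defaults.items():
        value = get(name)
        is_default = value == default
        if is_default:
            at_default.append(name)
        status = "DEFAULT" if is_default else "passed"
        print(f"{name:<14} {status:<8} {value!r}")
    return at_default


# In the notebook: report_widgets(dbutils.widgets.get, WIDGET_DEFAULTS)
```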

I'm quite certain this is not the issue, because the API call to the same job passes the parameters just fine.

To be sure, here is the JSON of the pipeline with only the Databricks Job activity:

{
  "name": "13_test",
  "properties": {
    "activities": [
      {
        "name": "Job1",
        "type": "DatabricksJob",
        "dependsOn": [],
        "policy": {
          "timeout": "0.12:00:00",
          "retry": 0,
          "retryIntervalInSeconds": 30,
          "secureOutput": false,
          "secureInput": false
        },
        "userProperties": [],
        "typeProperties": {
          "jobId": "812604096617191",
          "jobParameters": {
            "EntityName": {
              "value": "@pipeline().parameters.Entity",
              "type": "Expression"
            },
            "EnvName": "ont",
            "MasterRunId": {
              "value": "@pipeline().RunId",
              "type": "Expression"
            },
            "SourceName": {
              "value": "@pipeline().parameters.source",
              "type": "Expression"
            }
          }
        },
        "linkedServiceName": {
          "referenceName": "AzureDatabricks1",
          "type": "LinkedServiceReference"
        }
      }
    ],
    "parameters": {
      "source": {
        "type": "string",
        "defaultValue": "eucrisk"
      },
      "Entity": {
        "type": "string",
        "defaultValue": "targetlimit"
      },
      "DestinationFolderDB": {
        "type": "string",
        "defaultValue": "bronze_landing"
      }
    },
    "folder": {
      "name": "10_extract"
    },
    "annotations": []
  }
}
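One quick check is which notebook widgets the Databricks Job activity actually supplies; any widget it does not send falls back to its default. A minimal sketch that loads a trimmed copy of the pipeline JSON above and compares the `jobParameters` keys with the widget names from the first cell (the function name `job_parameter_names` is hypothetical):

```python
import json

# Trimmed copy of the 13_test pipeline JSON (only the keys used here).
PIPELINE_JSON = """
{
  "name": "13_test",
  "properties": {
    "activities": [
      {
        "name": "Job1",
        "type": "DatabricksJob",
        "typeProperties": {
          "jobId": "812604096617191",
          "jobParameters": {
            "EntityName": {"value": "@pipeline().parameters.Entity", "type": "Expression"},
            "EnvName": "ont",
            "MasterRunId": {"value": "@pipeline().RunId", "type": "Expression"},
            "SourceName": {"value": "@pipeline().parameters.source", "type": "Expression"}
          }
        }
      }
    ]
  }
}
"""

# Widget names defined in the notebook's first cell.
NOTEBOOK_WIDGETS = {"EnvName", "WheelFileName", "BasePath", "EntityName",
                    "MasterRunId", "RunId", "SourceName", "Debug"}


def job_parameter_names(pipeline: dict) -> set:
    """Collect the jobParameters keys of every DatabricksJob activity."""
    names = set()
    for activity in pipeline["properties"]["activities"]:
        if activity.get("type") == "DatabricksJob":
            # set.update on a dict adds its keys
            names.update(activity["typeProperties"].get("jobParameters", {}))
    return names


sent = job_parameter_names(json.loads(PIPELINE_JSON))
print("sent and defined:   ", sorted(sent & NOTEBOOK_WIDGETS))
print("widget default only:", sorted(NOTEBOOK_WIDGETS - sent))
print("sent but no widget: ", sorted(sent - NOTEBOOK_WIDGETS))
```

With this JSON, `BasePath`, `Debug`, `RunId` and `WheelFileName` are not sent and keep their defaults, while all four keys that are sent match widget names exactly, so a simple name mismatch between the activity and the widgets does not appear to be the cause.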

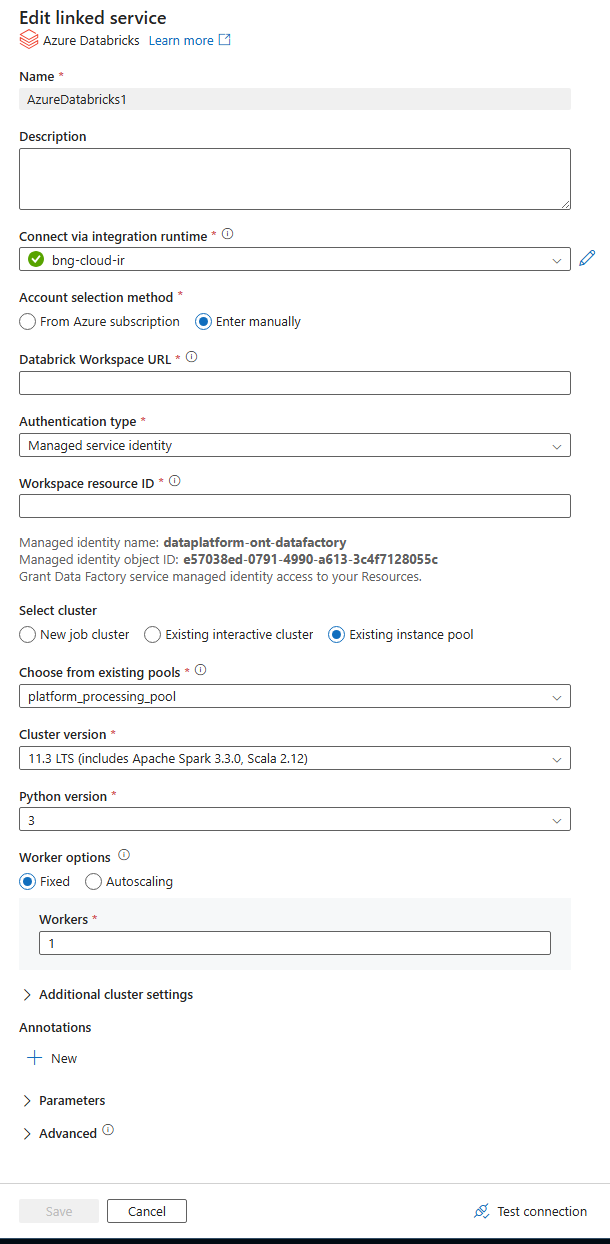

The IR is Azure-hosted, and I use the same IR for the API call. The image above shows the settings; I removed the workspace URL and the workspace resource ID.

Here is the JSON of the Web activity for the API call, which does pass the parameters:

{
  "name": "Trigger Databricks Job run",
  "description": "fire & forget",
  "type": "WebActivity",
  "state": "Active",
  "onInactiveMarkAs": "Succeeded",
  "dependsOn": [],
  "policy": {
    "timeout": "0.12:00:00",
    "retry": 3,
    "retryIntervalInSeconds": 30,
    "secureOutput": false,
    "secureInput": false
  },
  "userProperties": [],
  "typeProperties": {
    "method": "POST",
    "url": {
      "value": "@concat(\n pipeline().globalParameters.DatabricksWorkspaceURL,\n '/api/2.1/jobs/run-now'\n)",
      "type": "Expression"
    },
    "connectVia": {
      "referenceName": "bng-cloud-ir",
      "type": "IntegrationRuntimeReference"
    },
    "body": {
      "value": "{\n \"job_id\": \"@{pipeline().globalParameters.DatabricksJobId}\",\n \"job_parameters\": {\n \"EntityName\": \"@{pipeline().parameters.Entity}\",\n \"EnvName\": \"@{pipeline().globalParameters.Tier}\",\n \"MasterRunId\": \"@{pipeline().parameters.MasterRunId}\",\n \"SourceName\": \"@{pipeline().parameters.source}\"\n }\n}",
      "type": "Expression"
    },
    "authentication": {
      "type": "MSI",
      "resource": "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d"
    }
  }
}
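To rule ADF out entirely, the same run-now call can be reproduced from Python. A minimal standard-library sketch that builds the same body as the Web activity (`job_id` plus `job_parameters`, the Jobs API 2.1 field for job-level parameters). Assumptions: the workspace URL is a placeholder, authentication uses a bearer token you supply (the Web activity uses managed identity, which this sketch does not replicate), and the job ID is taken from the DatabricksJob activity, assuming it is the same job as `DatabricksJobId`. The function only constructs the request; sending it is left commented out.

```python
import json
import urllib.request
from typing import Dict


def build_run_now_request(workspace_url: str, token: str, job_id: int,
                          job_parameters: Dict[str, str]) -> urllib.request.Request:
    """Build a POST to /api/2.1/jobs/run-now mirroring the Web activity body."""
    body = {"job_id": job_id, "job_parameters": job_parameters}
    return urllib.request.Request(
        url=workspace_url.rstrip("/") + "/api/2.1/jobs/run-now",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder URL and token; parameter values mirror the pipeline defaults.
req = build_run_now_request(
    "https://adb-0000000000000000.0.azuredatabricks.net",
    "<token>",
    812604096617191,
    {"EntityName": "targetlimit", "EnvName": "ont",
     "MasterRunId": "manual-test", "SourceName": "eucrisk"},
)
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))  # the response includes the new run_id
```

If the widgets pick up these values when the job is triggered this way but not from the Databricks Job activity, the difference lies in how the activity hands over `jobParameters`, not in the notebook.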