Portal:Computer programming

Portal maintenance status: (September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application ___domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles - load new batch

-

Image 1The Antikythera mechanism (/ˌæntɪkɪˈθɪərə/ AN-tik-ih-THEER-ə, US also /ˌæntaɪkɪˈ-/ AN-ty-kih-) is an ancient Greek hand-powered orrery (model of the Solar System). It is the oldest known example of an analogue computer. It could be used to predict astronomical positions and eclipses decades in advance. It could also be used to track the four-year cycle of athletic games similar to an olympiad, the cycle of the ancient Olympic Games.

The artefact was among wreckage retrieved from a shipwreck off the coast of the Greek island Antikythera in 1901. In 1902, during a visit to the National Archaeological Museum in Athens, it was noticed by Greek politician Spyridon Stais as containing a gear, prompting the first study of the fragment by his cousin, Valerios Stais, the museum director. The device, housed in the remains of a wooden-framed case of (uncertain) overall size 34 cm × 18 cm × 9 cm (13.4 in × 7.1 in × 3.5 in), was found as one lump, later separated into three main fragments which are now divided into 82 separate fragments after conservation efforts. Four of these fragments contain gears, while inscriptions are found on many others. The largest gear is about 13 cm (5 in) in diameter and originally had 223 teeth. All these fragments of the mechanism are kept at the National Archaeological Museum, along with reconstructions and replicas, to demonstrate how it may have looked and worked.

In 2005, a team from Cardiff University led by Mike Edmunds used computer X-ray tomography and high resolution scanning to image inside fragments of the crust-encased mechanism and read the faintest inscriptions that once covered the outer casing. These scans suggest that the mechanism had 37 meshing bronze gears enabling it to follow the movements of the Moon and the Sun through the zodiac, to predict eclipses and to model the irregular orbit of the Moon, where the Moon's velocity is higher in its perigee than in its apogee. This motion was studied in the 2nd century BC by astronomer Hipparchus of Rhodes, and he may have been consulted in the machine's construction. There is speculation that a portion of the mechanism is missing and it calculated the positions of the five classical planets. The inscriptions were further deciphered in 2016, revealing numbers connected with the synodic cycles of Venus and Saturn. (Full article...) -

Image 2

Andrew Stuart Tanenbaum (born March 16, 1944), sometimes referred to by the handle AST, is an American-born Dutch computer scientist and retired professor emeritus of computer science at the Vrije Universiteit Amsterdam in the Netherlands.

He is the author of MINIX, a free Unix-like operating system for teaching purposes, and has written multiple computer science textbooks regarded as standard texts in the field. He regards his teaching job as his most important work. Since 2004 he has operated Electoral-vote.com, a website dedicated to analysis of polling data in federal elections in the United States. (Full article...) -

Image 3

The source code for a computer program in C. The gray lines are comments that explain the program to humans. When compiled and run, it will give the output "Hello, world!".

A programming language is an artificial language for expressing computer programs.

Programming languages typically allow software to be written in a human readable manner.

Execution of a program requires an implementation. There are two main approaches for implementing a programming language – compilation, where programs are compiled ahead-of-time to machine code, and interpretation, where programs are directly executed. In addition to these two extremes, some implementations use hybrid approaches such as just-in-time compilation and bytecode interpreters. (Full article...) -

Image 4

tcsh and sh shell windows on a Mac OS X Leopard desktop

A Unix shell is a shell that provides a command-line user interface for a Unix-like operating system. A Unix shell provides a command language that can be used either interactively or for writing a shell script. A user typically interacts with a Unix shell via a terminal emulator; however, direct access via serial hardware connections or Secure Shell are common for server systems. Although use of a Unix shell is popular with some users, others prefer to use a windowing system such as desktop Linux distribution or macOS instead of a command-line interface.

A user may have access to multiple Unix shells with one configured to run by default when the user logs in interactively. The default selection is typically stored in a user's profile; for example, in the local passwd file or in a distributed configuration system such as NIS or LDAP. A user may use other shells nested inside the default shell.

A Unix shell may provide many features including: variable definition and substitution, command substitution, filename wildcarding, stream piping, control flow structures (condition-testing and iteration), working directory context, and here document. (Full article...) -

Image 5

Tcl (pronounced "tickle" or "TCL"; originally Tool Command Language) is a high-level, general-purpose, interpreted, dynamic programming language. It was designed with the goal of being very simple but powerful. Tcl casts everything into the mold of a command, even programming constructs like variable assignment and procedure definition. Tcl supports multiple programming paradigms, including object-oriented, imperative, functional, and procedural styles.

It is commonly used embedded into C applications, for rapid prototyping, scripted applications, GUIs, and testing. Tcl interpreters are available for many operating systems, allowing Tcl code to run on a wide variety of systems. Because Tcl is a very compact language, it is used on embedded systems platforms, both in its full form and in several other small-footprint versions.

The popular combination of Tcl with the Tk extension is referred to as Tcl/Tk (pronounced "tickle teak"[citation needed] or "tickle TK") and enables building a graphical user interface (GUI) natively in Tcl. Tcl/Tk is included in the standard Python installation in the form of Tkinter. (Full article...) -

Image 6Java is a high-level, general-purpose, memory-safe, object-oriented programming language. It is intended to let programmers write once, run anywhere (WORA), meaning that compiled Java code can run on all platforms that support Java without the need to recompile. Java applications are typically compiled to bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. The syntax of Java is similar to C and C++, but has fewer low-level facilities than either of them. The Java runtime provides dynamic capabilities (such as reflection and runtime code modification) that are typically not available in traditional compiled languages.

Java gained popularity shortly after its release, and has been a popular programming language since then. Java was the third most popular programming language in 2022[update] according to GitHub. Although still widely popular, there has been a gradual decline in use of Java in recent years with other languages using JVM gaining popularity.

Java was designed by James Gosling at Sun Microsystems. It was released in May 1995 as a core component of Sun's Java platform. The original and reference implementation Java compilers, virtual machines, and class libraries were released by Sun under proprietary licenses. As of May 2007, in compliance with the specifications of the Java Community Process, Sun had relicensed most of its Java technologies under the GPL-2.0-only license. Oracle, which bought Sun in 2010, offers its own HotSpot Java Virtual Machine. However, the official reference implementation is the OpenJDK JVM, which is open-source software used by most developers and is the default JVM for almost all Linux distributions. (Full article...) -

Image 7

C++ is a high-level, general-purpose programming language created by Danish computer scientist Bjarne Stroustrup. First released in 1985 as an extension of the C programming language, adding object-oriented (OOP) features, it has since expanded significantly over time adding more OOP and other features; as of 1997[update]/C++98 standardization, C++ has added functional features, in addition to facilities for low-level memory manipulation for systems like microcomputers or to make operating systems like Linux or Windows, and even later came features like generic programming (through the use of templates). C++ is usually implemented as a compiled language, and many vendors provide C++ compilers, including the Free Software Foundation, LLVM, Microsoft, Intel, Embarcadero, Oracle, and IBM.

C++ was designed with systems programming and embedded, resource-constrained software and large systems in mind, with performance, efficiency, and flexibility of use as its design highlights. C++ has also been found useful in many other contexts, with key strengths being software infrastructure and resource-constrained applications, including desktop applications, video games, servers (e.g., e-commerce, web search, or databases), and performance-critical applications (e.g., telephone switches or space probes).

C++ is standardized by the International Organization for Standardization (ISO), with the latest standard version ratified and published by ISO in October 2024 as ISO/IEC 14882:2024 (informally known as C++23). The C++ programming language was initially standardized in 1998 as ISO/IEC 14882:1998, which was then amended by the C++03, C++11, C++14, C++17, and C++20 standards. The current C++23 standard supersedes these with new features and an enlarged standard library. Before the initial standardization in 1998, C++ was developed by Stroustrup at Bell Labs since 1979 as an extension of the C language; he wanted an efficient and flexible language similar to C that also provided high-level features for program organization. Since 2012, C++ has been on a three-year release schedule with C++26 as the next planned standard. (Full article...) -

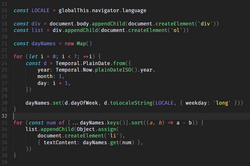

Image 8

JavaScript (JS) is a programming language and core technology of the web platform, alongside HTML and CSS. Ninety-nine percent of websites on the World Wide Web use JavaScript on the client side for webpage behavior.

Web browsers have a dedicated JavaScript engine that executes the client code. These engines are also utilized in some servers and a variety of apps. The most popular runtime system for non-browser usage is Node.js.

JavaScript is a high-level, often just-in-time–compiled language that conforms to the ECMAScript standard. It has dynamic typing, prototype-based object-orientation, and first-class functions. It is multi-paradigm, supporting event-driven, functional, and imperative programming styles. It has application programming interfaces (APIs) for working with text, dates, regular expressions, standard data structures, and the Document Object Model (DOM). (Full article...) -

Image 9

Charles Babbage KH FRS (/ˈbæbɪdʒ/; 26 December 1791 – 18 October 1871) was an English polymath. A mathematician, philosopher, inventor and mechanical engineer, Babbage originated the concept of a digital programmable computer.

Babbage is considered by some to merit the title of "father of the computer". He is credited with inventing the first mechanical computer, the difference engine, that eventually led to more complex electronic designs, though all the essential ideas of modern computers are to be found in his analytical engine, programmed using a principle openly borrowed from the Jacquard loom. As part of his computer work, he also designed the first computer printers. He had a broad range of interests in addition to his work on computers, covered in his 1832 book Economy of Manufactures and Machinery. He was an important figure in the social scene in London, and is credited with importing the "scientific soirée" from France with his well-attended Saturday evening soirées. His varied work in other fields has led him to be described as "pre-eminent" among the many polymaths of his century.

Babbage, who died before the complete successful engineering of many of his designs, including his Difference Engine and Analytical Engine, remained a prominent figure in the ideating of computing. Parts of his incomplete mechanisms are on display in the Science Museum in London. In 1991, a functioning difference engine was constructed from the original plans. Built to tolerances achievable in the 19th century, the success of the finished engine indicated that Babbage's machine would have worked. (Full article...) -

Image 10SNOBOL (String Oriented and Symbolic Language) is a series of programming languages developed between 1962 and 1967 at AT&T Bell Laboratories by David J. Farber, Ralph Griswold and Ivan P. Polonsky, culminating in SNOBOL4. It was one of a number of text-string-oriented languages developed during the 1950s and 1960s; others included COMIT and TRAC. Despite the similar name, it is entirely unlike COBOL.

SNOBOL4 stands apart from most programming languages of its era by having patterns as a first-class data type, a data type whose values can be manipulated in all ways permitted to any other data type in the programming language, and by providing operators for pattern concatenation and alternation. SNOBOL4 patterns are a type of object and admit various manipulations, much like later object-oriented languages such as JavaScript whose patterns are known as regular expressions. In addition SNOBOL4 strings generated during execution can be treated as programs and either interpreted or compiled and executed (as in the eval function of other languages).

SNOBOL4 was quite widely taught in larger U.S. universities in the late 1960s and early 1970s and was widely used in the 1970s and 1980s as a text manipulation language in the humanities. (Full article...) -

Image 11

Rust is a general-purpose programming language. It is noted for its emphasis on performance, type safety, concurrency, and memory safety.

Rust supports multiple programming paradigms. It was influenced by ideas from functional programming, including immutability, higher-order functions, algebraic data types, and pattern matching. It also supports object-oriented programming via structs, enums, traits, and methods. Rust is noted for enforcing memory safety (i.e., that all references point to valid memory) without a conventional garbage collector; instead, memory safety errors and data races are prevented by the "borrow checker", which tracks the object lifetime of references at compile time.

Software developer Graydon Hoare created Rust in 2006 while working at Mozilla Research, which officially sponsored the project in 2009. The first stable release, Rust 1.0, was published in May 2015. Following a layoff of Mozilla employees in August 2020, four other companies joined Mozilla in sponsoring Rust through the creation of the Rust Foundation in February 2021. (Full article...) -

Image 12Atari BASIC (1979) for Atari 8-bit computers

BASIC (Beginners' All-purpose Symbolic Instruction Code) is a family of general-purpose, high-level programming languages designed for ease of use. The original version was created by John G. Kemeny and Thomas E. Kurtz at Dartmouth College in 1964. They wanted to enable students in non-scientific fields to use computers. At the time, nearly all computers required writing custom software, which only scientists and mathematicians tended to learn.

In addition to the programming language, Kemeny and Kurtz developed the Dartmouth Time-Sharing System (DTSS), which allowed multiple users to edit and run BASIC programs simultaneously on remote terminals. This general model became popular on minicomputer systems like the PDP-11 and Data General Nova in the late 1960s and early 1970s. Hewlett-Packard produced an entire computer line for this method of operation, introducing the HP2000 series in the late 1960s and continuing sales into the 1980s. Many early video games trace their history to one of these versions of BASIC.

The emergence of microcomputers in the mid-1970s led to the development of multiple BASIC dialects, including Microsoft BASIC in 1975. Due to the tiny main memory available on these machines, often 4 KB, a variety of Tiny BASIC dialects were also created. BASIC was available for almost any system of the era and became the de facto programming language for home computer systems that emerged in the late 1970s. These PCs almost always had a BASIC interpreter installed by default, often in the machine's firmware or sometimes on a ROM cartridge. (Full article...) -

Image 13

Computer class at Chkalovski Village School No. 2 in 1985–1986

The history of computing in the Soviet Union began in the late 1940s, when the country began to develop its Small Electronic Calculating Machine (MESM) at the Kiev Institute of Electrotechnology in Feofaniya. Initial ideological opposition to cybernetics in the Soviet Union was overcome by a Khrushchev era policy that encouraged computer production.

By the early 1970s, the uncoordinated work of competing government ministries had left the Soviet computer industry in disarray. Due to lack of common standards for peripherals and lack of digital storage capacity the Soviet Union's technology significantly lagged behind the West's semiconductor industry. The Soviet government decided to abandon development of original computer designs and encouraged cloning of existing Western systems (e.g. the 1801 CPU series was scrapped in favor of the PDP-11 ISA by the early 1980s).

Soviet industry was unable to mass-produce computers to acceptable quality standards and locally manufactured copies of Western hardware were unreliable. As personal computers spread to industries and offices in the West, the Soviet Union's technological lag increased. (Full article...) -

Image 14

The IBM Blue Gene/P supercomputer installation in 2007 at the Argonne Leadership Computing Facility located in the Argonne National Laboratory, in Lemont, Illinois, US

Fortran (/ˈfɔːrtræn/; formerly FORTRAN) is a third-generation, compiled, imperative programming language that is especially suited to numeric computation and scientific computing.

Fortran was originally developed by IBM with a reference manual being released in 1956; however, the first compilers only began to produce accurate code two years later. Fortran computer programs have been written to support scientific and engineering applications, such as numerical weather prediction, finite element analysis, computational fluid dynamics, plasma physics, geophysics, computational physics, crystallography and computational chemistry. It is a popular language for high-performance computing and is used for programs that benchmark and rank the world's fastest supercomputers.

Fortran has evolved through numerous versions and dialects. In 1966, the American National Standards Institute (ANSI) developed a standard for Fortran to limit proliferation of compilers using slightly different syntax. Successive versions have added support for a character data type (Fortran 77), structured programming, array programming, modular programming, generic programming (Fortran 90), parallel computing (Fortran 95), object-oriented programming (Fortran 2003), and concurrent programming (Fortran 2008). (Full article...) -

Image 15

Ada is a structured, statically typed, imperative, and object-oriented high-level programming language, inspired by Pascal and other languages. It has built-in language support for design by contract (DbC), extremely strong typing, explicit concurrency, tasks, synchronous message passing, protected objects, and non-determinism. Ada improves code safety and maintainability by using the compiler to find errors in favor of runtime errors. Ada is an international technical standard, jointly defined by the International Organization for Standardization (ISO), and the International Electrotechnical Commission (IEC). As of May 2023[update], the standard, ISO/IEC 8652:2023, is called Ada 2022 informally.

Ada was originally designed by a team led by French computer scientist Jean Ichbiah of Honeywell under contract to the United States Department of Defense (DoD) from 1977 to 1983 to supersede over 450 programming languages then used by the DoD. Ada was named after Ada Lovelace (1815–1852), who has been credited as the first computer programmer. (Full article...)

Selected images

-

Image 1A view of the GNU nano Text editor version 6.0

-

Image 2This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

-

Image 5Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

-

Image 6A head crash on a modern hard disk drive

-

Image 7Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

-

Image 8Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

-

Image 10Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

-

Image 12A lone house. An image made using Blender 3D.

-

Image 13Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

-

Image 14GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

-

Image 15Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

-

Image 16Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

-

Image 17An IBM Port-A-Punch punched card

-

Image 18Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

Did you know? - load more entries

- ... that a "hacker" with blog posts written by ChatGPT was at the center of an online scavenger hunt promoting Avenged Sevenfold's album Life Is but a Dream...?

- ... that the Gale–Shapley algorithm was used to assign medical students to residencies long before its publication by Gale and Shapley?

- ... that Cornell University's student-oriented programming language dialect was made available to other universities but required a "research grant" payment in exchange?

- ... that it took a particle accelerator and machine-learning algorithms to extract the charred text of PHerc. Paris. 4 without unrolling it?

- ... that Phil Fletcher as Hacker T. Dog caused Lauren Layfield to make the "most famous snort" in the United Kingdom in 2016?

- ... that the study of selection algorithms has been traced to an 1883 work of Lewis Carroll on how to award second place in single-elimination tournaments?

Subcategories

WikiProjects

- There are many users interested in computer programming, join them.

Computer programming news

No recent news

Topics

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

-

Commons

Free media repository -

Wikibooks

Free textbooks and manuals -

Wikidata

Free knowledge base -

Wikinews

Free-content news -

Wikiquote

Collection of quotations -

Wikisource

Free-content library -

Wikiversity

Free learning tools -

Wiktionary

Dictionary and thesaurus