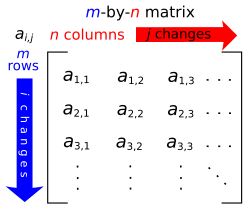

A singular matrix is a square matrix that is not invertible, unlike a non-singular matrix, which is invertible. Equivalently, an $n$-by-$n$ matrix $A$ is singular if and only if its determinant is zero, $\det(A) = 0$.[1] In classical linear algebra, a matrix is called non-singular (or invertible) when it has an inverse; by definition, a matrix that fails this criterion is singular. In more algebraic terms, an $n$-by-$n$ matrix $A$ is singular exactly when its columns (and rows) are linearly dependent, so that the linear map $x \mapsto Ax$ is not one-to-one.

In this case the kernel (null space) of $A$ is non-trivial (has dimension ≥ 1), and the homogeneous system $Ax = 0$ admits non-zero solutions. These characterizations follow from the standard rank-nullity and invertibility theorems: for a square matrix $A$, $\det(A) \neq 0$ if and only if $\operatorname{rank}(A) = n$, and $\det(A) = 0$ if and only if $\operatorname{rank}(A) < n$.
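The rank-nullity relationship can be checked numerically. The following is a minimal sketch in Python with NumPy, which is not part of the original text. The 3-by-3 matrix and the absolute cutoff `tol` for treating a singular value as zero are illustrative assumptions, and `rank_and_null_space` is a hypothetical helper name. It reads the rank and a null-space basis off the singular value decomposition.

```python
import numpy as np

def rank_and_null_space(A, tol=1e-10):
    """Return (rank, null-space basis) of a square matrix A via the SVD.

    Singular values below `tol` (an assumed absolute cutoff) count as zero.
    """
    A = np.asarray(A, dtype=float)
    _, s, vt = np.linalg.svd(A)
    rank = int(np.sum(s > tol))
    # Rows of vt beyond the rank span the null space of A.
    null_basis = vt[rank:].T
    return rank, null_basis

# A 3-by-3 matrix whose third column is the sum of the first two.
A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 9.0],
              [7.0, 8.0, 15.0]])
rank, N = rank_and_null_space(A)
n = A.shape[1]
print(rank, N.shape[1])          # rank and nullity
print(rank + N.shape[1] == n)    # rank-nullity: rank + nullity = n
print(np.allclose(A @ N, 0))     # null-space vectors solve Ax = 0
```

Here the rank is below $n = 3$, so the null space is non-trivial and $A$ is singular.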

Conditions and properties

One of the basic conditions for a singular matrix is that its determinant is equal to zero. If a matrix has determinant zero, i.e. $\det(A) = 0$, then its columns are linearly dependent. Conversely, the determinant is an alternating multilinear form on the columns, so any linear dependence among the columns makes the determinant zero. Hence $\det(A) = 0$ if and only if the columns of $A$ are linearly dependent.

For example:

if

here $\det(A) = 0$, which implies that the columns are linearly dependent.
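To make the determinant criterion concrete, the sketch below (Python with NumPy; the column vectors are arbitrary illustrative values, not taken from the source) builds a matrix whose third column is a linear combination of the first two and confirms that its determinant vanishes up to floating-point rounding.

```python
import numpy as np

# Two independent columns chosen for illustration (assumed values).
c1 = np.array([1.0, 0.0, 2.0])
c2 = np.array([0.0, 3.0, 1.0])
c3 = 2.0 * c1 - 5.0 * c2      # third column is a linear combination of the others

A = np.column_stack([c1, c2, c3])
det_A = np.linalg.det(A)
print(det_A)                  # zero up to floating-point rounding

# Multilinearity: det(c1, c2, 2*c1 - 5*c2) = 2*det(c1, c2, c1) - 5*det(c1, c2, c2),
# and each term vanishes because an alternating form is zero on a repeated column.
print(np.isclose(np.linalg.det(np.column_stack([c1, c2, c1])), 0.0))
print(np.isclose(det_A, 0.0))
```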

Computational implications

An invertible matrix lets an algorithm assume that certain transformations, computations, and systems can be reversed and solved uniquely, for example going from $Ax = b$ to $x = A^{-1}b$. This lets a solver determine whether a solution is unique.
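As a rough illustration, assuming NumPy is available, `numpy.linalg.solve` returns $x = A^{-1}b$ for an invertible matrix and raises `numpy.linalg.LinAlgError` when its LU factorization meets an exactly zero pivot. The matrices below and the helper name `try_solve` are hypothetical examples.

```python
import numpy as np

def try_solve(A, b):
    """Solve Ax = b with NumPy; return None if A is detected as singular."""
    try:
        return np.linalg.solve(A, b)
    except np.linalg.LinAlgError:
        # Raised when the LU factorization encounters an exactly zero pivot.
        return None

b = np.array([3.0, 5.0])
A_invertible = np.array([[2.0, 1.0],
                         [1.0, 3.0]])
A_singular = np.array([[1.0, 2.0],
                       [2.0, 4.0]])   # second row is twice the first

x = try_solve(A_invertible, b)
print(x, np.allclose(A_invertible @ x, b))
print(try_solve(A_singular, b))
```

In floating-point arithmetic a matrix that is only nearly singular may not raise the exception, so the exception alone is not a reliable singularity test; checking the rank or condition number is more robust.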

In Gaussian elimination, invertibility of the coefficient matrix ensures that the algorithm produces a unique solution. When the matrix is invertible, a non-zero pivot is always available in each column (after a row swap if necessary), so the system can be solved; for a singular matrix, however, some column has no non-zero pivot, and this cannot be fixed by row swaps alone.[2] Elimination then either breaks down or reveals an inconsistent system. A singular matrix also prevents back substitution, which requires every diagonal entry of the resulting upper triangular matrix $U$ to be non-zero, i.e. $u_{ii} \neq 0$ for all $i$. For a singular matrix the system instead has either infinitely many solutions or none.
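The pivot argument can be sketched as a short Gaussian elimination routine with partial pivoting. This is an illustrative Python/NumPy sketch, not a production solver: the function name `gaussian_solve` and the pivot threshold `tol` are assumptions, and any pivot smaller than `tol` in absolute value is treated as zero.

```python
import numpy as np

def gaussian_solve(A, b, tol=1e-12):
    """Solve Ax = b by Gaussian elimination with partial pivoting.

    Raises ValueError when some column has no pivot larger than `tol`
    (an assumed threshold), i.e. when A is (numerically) singular.
    """
    A = np.array(A, dtype=float)
    b = np.array(b, dtype=float)
    n = A.shape[0]
    for k in range(n):
        # Partial pivoting: choose the row with the largest |entry| in column k.
        p = k + int(np.argmax(np.abs(A[k:, k])))
        if abs(A[p, k]) < tol:
            raise ValueError(f"zero pivot in column {k}: matrix is singular")
        if p != k:
            A[[k, p]] = A[[p, k]]
            b[[k, p]] = b[[p, k]]
        # Eliminate the entries below the pivot.
        for i in range(k + 1, n):
            m = A[i, k] / A[k, k]
            A[i, k:] -= m * A[k, k:]
            b[i] -= m * b[k]
    # Back substitution: every diagonal entry of U is non-zero at this point.
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (b[i] - A[i, i + 1:] @ x[i + 1:]) / A[i, i]
    return x

print(gaussian_solve([[2, 1], [1, 3]], [3, 5]))
try:
    gaussian_solve([[1, 2], [2, 4]], [3, 5])
except ValueError as err:
    print(err)
```

For the singular matrix, the row swap brings $(2, 4)$ to the top, elimination turns the other row into zeros, and the search for a pivot in the second column fails.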

Applications

In mechanical and robotic systems, singular Jacobian matrices indicate kinematic singularities. For example, the Jacobian of a robotic manipulator (mapping joint velocities to end-effector velocity) loses rank when the robot reaches a configuration with constrained motion. At a singular configuration, the robot cannot move or apply forces in certain directions.[3]
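For a concrete case, consider a planar arm with two revolute joints, a standard textbook model chosen here for illustration; the link lengths and joint angles are assumed values. Its Jacobian has determinant $l_1 l_2 \sin\theta_2$, which vanishes when the elbow is fully stretched or folded. A minimal NumPy sketch:

```python
import numpy as np

def planar_2link_jacobian(theta1, theta2, l1=1.0, l2=1.0):
    """Jacobian of the end-effector position (x, y) of a planar two-link arm
    with respect to the joint angles (theta1, theta2).

    Link lengths l1, l2 are illustrative assumptions.
    """
    s1, c1 = np.sin(theta1), np.cos(theta1)
    s12, c12 = np.sin(theta1 + theta2), np.cos(theta1 + theta2)
    return np.array([[-l1 * s1 - l2 * s12, -l2 * s12],
                     [ l1 * c1 + l2 * c12,  l2 * c12]])

# det(J) = l1 * l2 * sin(theta2), so J is singular when the elbow angle
# theta2 is 0 (arm fully stretched) or pi (arm folded back on itself).
for theta2 in [np.pi / 2, np.pi / 6, 0.0]:
    J = planar_2link_jacobian(0.3, theta2)
    print(f"theta2={theta2:.3f}  det(J)={np.linalg.det(J):+.3f}  "
          f"rank={np.linalg.matrix_rank(J)}")
```

At $\theta_2 = 0$ the two columns of $J$ are parallel, so joint velocities can only move the end effector perpendicular to the outstretched arm, never along it.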

In graph theory and network physics, the Laplacian matrix of a graph is inherently singular (it has a zero eigenvalue) because each row sums to zero.[4] This reflects the fact that the all-ones vector $\mathbf{1}$ is in its null space: $L\mathbf{1} = 0$.
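A small check, assuming NumPy and an illustrative path graph on four vertices, builds the Laplacian $L = D - A$ (degree matrix minus adjacency matrix) and confirms that the all-ones vector lies in its null space:

```python
import numpy as np

# Path graph on 4 vertices: 0-1-2-3 (an illustrative choice).
edges = [(0, 1), (1, 2), (2, 3)]
n = 4

adjacency = np.zeros((n, n))
for u, v in edges:
    adjacency[u, v] = adjacency[v, u] = 1.0

degree = np.diag(adjacency.sum(axis=1))
L = degree - adjacency           # graph Laplacian L = D - A

ones = np.ones(n)
print(L.sum(axis=1))             # every row sums to zero
print(L @ ones)                  # so the all-ones vector is in the null space
print(np.linalg.eigvalsh(L)[0])  # smallest eigenvalue is 0 (up to rounding)
```

For a connected graph such as this one the zero eigenvalue is simple, so the rank of $L$ is $n - 1$.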

In machine learning and statistics, singular matrices frequently appear due to multicollinearity. For instance, a data matrix $X$ leads to a singular covariance matrix or a singular $X^\mathsf{T} X$ if its features are linearly dependent. This occurs in linear regression when predictors are collinear, causing the normal-equations matrix $X^\mathsf{T} X$ to be singular.[5] The remedy is often to drop or combine features, or to use the pseudoinverse. Dimension-reduction techniques like Principal Component Analysis (PCA) exploit the singular value decomposition (SVD), which yields low-rank approximations of the data, effectively treating the data covariance as singular by discarding small singular values.[5]
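The multicollinearity case can be reproduced with synthetic data. In this sketch (Python with NumPy; the data, the random seed and the cutoff `rcond=1e-10` are assumptions for illustration), one predictor is an exact linear combination of the other two, so $X$, and hence $X^\mathsf{T}X$, has rank 2 rather than 3. `numpy.linalg.pinv` then gives the minimum-norm least-squares coefficients.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 50

# Synthetic data (assumed for illustration): x3 is an exact linear
# combination of x1 and x2, so the predictors are perfectly collinear.
x1 = rng.normal(size=n_samples)
x2 = rng.normal(size=n_samples)
x3 = 2.0 * x1 - x2
X = np.column_stack([x1, x2, x3])
y = 1.0 * x1 + 0.5 * x2 + rng.normal(scale=0.1, size=n_samples)

# rank(X^T X) = rank(X) < 3, so the normal-equations matrix is singular.
print(np.linalg.matrix_rank(X))

# Pseudoinverse: singular values below rcond * (largest one) are treated as zero.
beta = np.linalg.pinv(X, rcond=1e-10) @ y
print(beta)
print(np.allclose(X.T @ (X @ beta - y), 0))  # normal equations still hold
```

The explicit `rcond` matters here: with a cutoff that is too small, rounding noise in the near-zero singular value would be inverted and the coefficients would blow up.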

Certain transformations (e.g. projections from 3D to 2D) are modeled by singular matrices, since they collapse a dimension. Handling these requires care (one cannot invert a projection). In cryptography and coding theory, invertible matrices are used for mixing operations; singular ones would be avoided or detected as errors.[6]
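As a minimal illustration (NumPy, with an assumed orthogonal projection onto the $xy$-plane), two different points that differ only in their $z$-coordinate have the same image, which is why a projection cannot be inverted:

```python
import numpy as np

# Orthogonal projection of 3D space onto the xy-plane (an illustrative choice).
P = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0]])

p = np.array([1.0, 2.0, 3.0])
q = np.array([1.0, 2.0, -7.0])   # differs from p only in the z-coordinate

print(P @ p, P @ q)              # both map to [1, 2, 0]: the z-axis is collapsed
print(np.linalg.det(P))          # zero, so P has no inverse
print(np.allclose(P @ P, P))     # projecting twice changes nothing (P^2 = P)
```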

History

The study of singular matrices is rooted in the early history of linear algebra. Determinants were first developed (in Japan by Seki in 1683, and in Europe by Leibniz in 1693 and later by Cramer in 1750)[7] as tools for solving systems of equations. Leibniz explicitly recognized that a system (in his case, three linear equations in two unknowns) has a solution precisely when a certain determinant expression equals zero. In that sense, singularity (determinant zero) was understood as the critical condition for solvability. Over the 18th and 19th centuries, mathematicians (Laplace, Cauchy, etc.) established many properties of determinants and invertible matrices, formalizing the notion that $\det(A) = 0$ characterizes non-invertibility.

The term "singular matrix" itself emerged later, but the conceptual importance remained. In the 20th century, generalizations like the Moore–Penrose pseudoinverse were introduced to systematically handle singular or non-square cases. As recent scholarship notes, the idea of a pseudoinverse was proposed by E. H. Moore in 1920 and rediscovered by R. Penrose in 1955,[8] reflecting its longstanding utility. The pseudoinverse and singular value decomposition became fundamental in both theory and applications (e.g. in quantum mechanics and signal processing) for dealing with singularity. Today, singular matrices are a canonical subject in linear algebra: they delineate the boundary between invertible (well-behaved) cases and degenerate (ill-posed) cases. In abstract terms, singular matrices correspond to linear maps that are not isomorphisms and are thus central to the theory of vector spaces and linear transformations.

Example

Example 1 (2×2 matrix):

Compute its determinant: $\det(A) = 0$. Thus $A$ is singular. One sees directly that the second row is twice the first, so the rows are linearly dependent. To illustrate the failure of invertibility, attempt Gaussian elimination:

- Pivot on the first row; use it to eliminate the entry below:

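$R_2 \leftarrow R_2 - 2R_1$, which turns the second row into a row of zeros, since it was exactly twice the first.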

Now the second pivot would be the (2,2) entry, but it is zero. Since no non-zero pivot exists in column 2, elimination stops. This confirms that $\operatorname{rank}(A) = 1$ and that $A$ has no inverse.[9]

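Solving $Ax = b$ yields either infinitely many solutions or none. For example, $Ax = 0$ gives two equations in which the second is exactly twice the first, so they are the same equation. Thus the null space is one-dimensional, whereas $Ax = b$ has no solution whenever $b_2 \neq 2b_1$.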
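The worked example can be checked numerically. The sketch below (Python with NumPy) assumes one concrete matrix with the stated property, $A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix}$, as an illustrative choice. `is_consistent` is a hypothetical helper implementing the rank test of the Rouché–Capelli theorem: $Ax = b$ is solvable exactly when appending $b$ as a column does not increase the rank.

```python
import numpy as np

# One concrete matrix whose second row is twice the first (assumed entries).
A = np.array([[1.0, 2.0],
              [2.0, 4.0]])

print(np.linalg.det(A))          # 0 up to rounding: A is singular
print(np.linalg.matrix_rank(A))  # 1

# Null space: x1 + 2*x2 = 0, spanned by (-2, 1).
v = np.array([-2.0, 1.0])
print(A @ v)                     # [0, 0]

def is_consistent(A, b):
    """Ax = b is solvable iff appending b as a column does not raise the rank."""
    return np.linalg.matrix_rank(np.column_stack([A, b])) == np.linalg.matrix_rank(A)

print(is_consistent(A, np.array([3.0, 6.0])))   # b2 = 2*b1: infinitely many solutions
print(is_consistent(A, np.array([3.0, 5.0])))   # b2 != 2*b1: no solution
```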

References

- "Definition of SINGULAR SQUARE MATRIX". www.merriam-webster.com. Retrieved 2025-05-16.

- Johnson, P. Sam. "Matrices and Gaussian Elimination (Part-1)" (PDF). NITK: 27 – via National Institute of Technology.

- "5.3. Singularities – Modern Robotics". modernrobotics.northwestern.edu. Retrieved 2025-05-25.

- "ALAFF Singular matrices and the eigenvalue problem". www.cs.utexas.edu. Retrieved 2025-05-25.

- "Singular value decomposition and principal component analysis" (PDF). Portland State University: 1–3.

- Maxrizal. "Public Key Cryptosystem Based on Singular Matrix": 1–3 – via Institut Sains Dan Bisnis Atma Luhur.

- "Matrices and determinants". Maths History. Retrieved 2025-05-25.

- Baksalary, Oskar Maria; Trenkler, Götz (2021-04-21). "The Moore–Penrose inverse: a hundred years on a frontline of physics research". The European Physical Journal H. 46 (1): 9. Bibcode:2021EPJH...46....9B. doi:10.1140/epjh/s13129-021-00011-y. ISSN 2102-6467.

- "Row pivoting — Fundamentals of Numerical Computation". fncbook.github.io. Retrieved 2025-05-25.